Mapping and 3D Representations

Before starting anything, a lot is taken from the one and only Cyrll Stachniss lectures. Link to the lecture .

Point Clouds

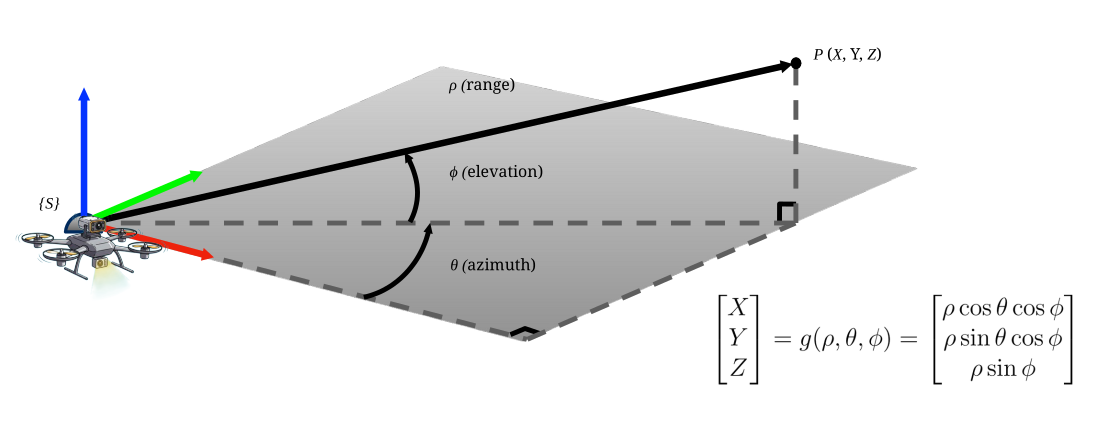

A point cloud is one of the most fundamental ways to represent a 3D environment. It is essentially a large collection of points where each point has an

These points are often generated by sensors like LIDAR, which measures distance (

- Pros:

- No data discretization is required, so the original measurement precision is maintained.

- The mapped area is not limited by a predefined grid size.

- Cons:

- Memory usage can be unbounded as more points are added.

- There is no explicit representation of free or unknown space, only surfaces where the sensor light reflects are recoreded.

Operations on Point Clouds

The basic operations of translation and rotation can be combined. A common sequence to transform a point cloud

For each point

Where:

is the translation vector. is the rotation matrix. is a scaling matrix.

Feature Extraction from Point Clouds

A raw point cloud is just a collection of coordinates.

To make it useful, we often need to extract higher-level features from it, like planes, spheres, or cylinders. This process of identifying geometric primitives is a form of segmentation.

Point Cloud Accumulation

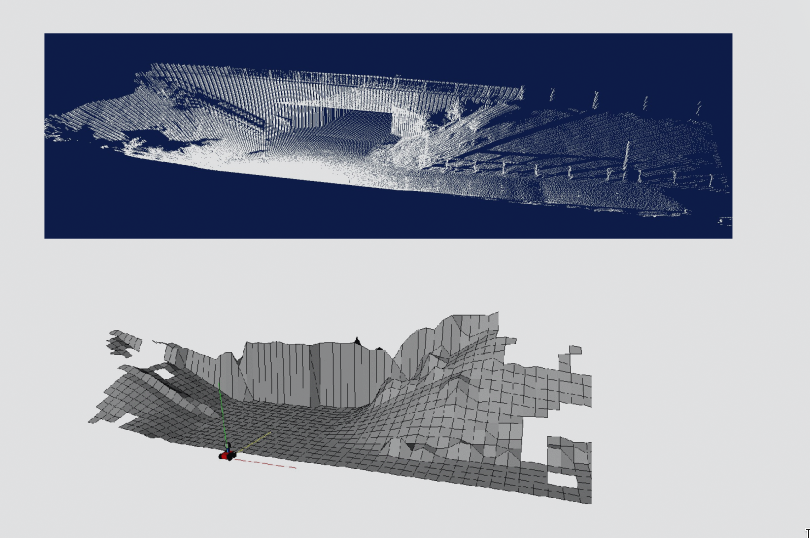

A single LIDAR scan only provides a point cloud from one perspective. To build a complete map, multiple point clouds taken from different robot poses must be combined into a single, global reference frame {G}. This process is called accumulation. ( Usually we take a defined global frame, or just the first frame )

If we have a point cloud

For each point

By applying this transformation to all points, we can merge multiple scans to create a dense, large-scale map of the environment.

Plane Fitting for Road Detection

A common task for an autonomous vehicle is to identify the road surface from a LIDAR point cloud. We can model the road as a simple plane and then fit this model to the point cloud data.

The equation of a plane in 3D is:

Our goal is to find the parameters

For a set of

We can stack all

Where:

Now to find the best-fit parameters

To find the minimum, we take the partial derivative with respect to

This gives us the normal equations:

We can then solve for the optimal parameters

This gives us the parameters

Voxel Grids

While point clouds are a direct representation of sensor data, they can be inefficient for tasks like collision checking.

Voxel grids address this by discretizing 3D space into a grid of volumetric elements, or voxels.

- Pros:

- Volumetric representation of space.

- Constant access time.

- Allows probabilistic updates.

- Cons:

- Memory requirements can be very high, as the entire volume of the map is allocated.

- The map's extent must be known beforehand.

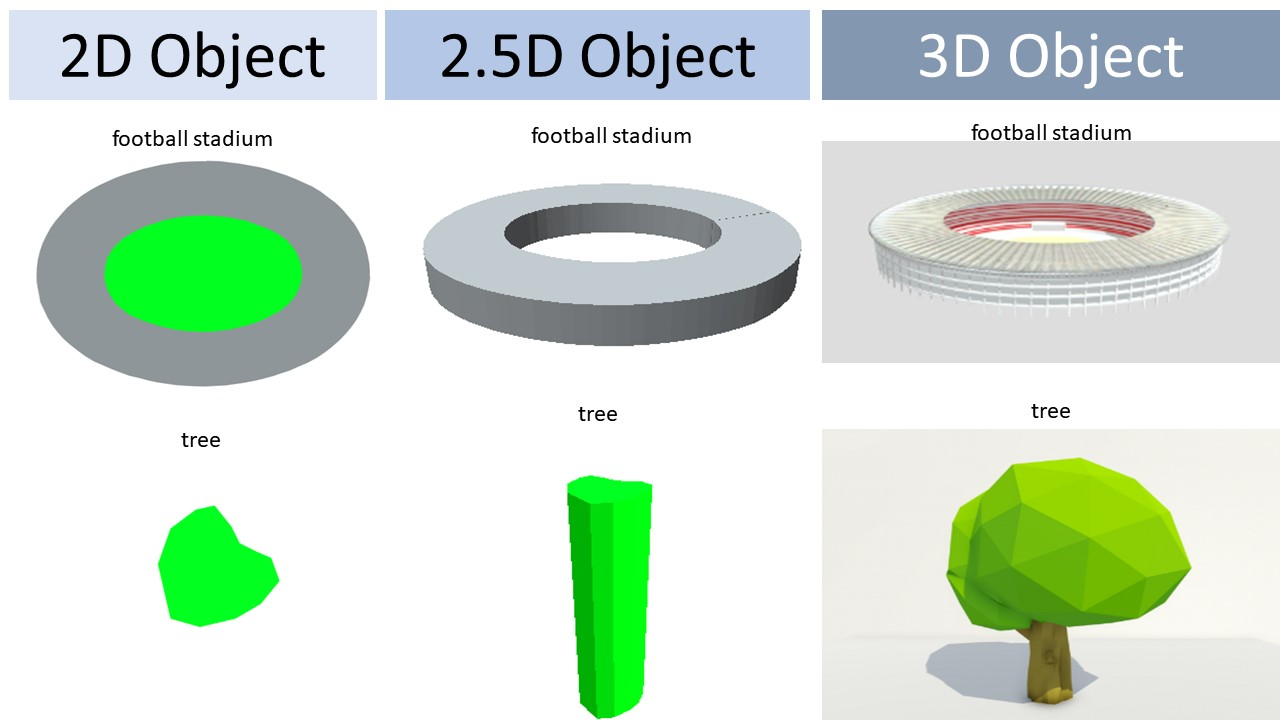

2.5D Maps / Height Maps

A common simplification of a full 3D voxel grid is a 2.5D map, often called a height map. Instead of storing a full 3D grid, a 2.5D map is a 2D grid where each cell stores a single height value, typically representing the highest point of an object within that cell's column.

Random Link: 2.5-D-Scene-Representation

A simple way to generate this is to average all the scan points that fall into a given (x,y) grid cell.

This representation is very memory efficient and provides constant-time access, but it's non-probabilistic and cannot distinguish between free and unknown space.

Elevation Maps

An elevation map is a more sophisticated, probabilistic version of a height map. Instead of just storing an average height, each cell stores a probabilistic estimate of the height, often updated using a Kalman filter. This allows the map to represent uncertainty, which typically increases with the measured distance from the sensor.

- Pros:

- Memory efficient 2.5D representation.

- Provides a probabilistic estimate of height.

- Cons:

- Cannot represent vertical objects or multiple levels (like a bridge over a road).

Multi-Level Surface (MLS) Maps

To overcome the single-level limitation of elevation maps, Multi-Level Surface (MLS) maps can be used. An MLS map allows each 2D cell to store multiple surface "patches". This enables the representation of complex vertical structures like bridges and underpasses.

Each patch in a cell consists of:

- The height mean,

- The height variance,

- A depth value,

TODO: Add more here, conversion from LIDAR to MLS .

Octrees (OctoMap)

While voxel grids are powerful, their memory usage is a significant drawback, especially in 3D where most cells are empty.

The Octree is a hierarchical data structure that efficiently addresses this problem by only allocating memory for volumes as needed.

An Octree works by recursively subdividing 3D space. A root node represents the entire volume. If this volume is not homogeneous (i.e., not entirely occupied, free, or unknown), it is subdivided into eight children nodes ("octants"), each representing a sub-volume. This process continues until a minimum voxel size is reached or a node's volume is homogeneous.

-

Pros:

- It is a full 3D model that is memory efficient.

- Can be updated probabilistically using a log-odds representation, just like occupancy grids.

- It is inherently multi-resolution, allowing for queries at different levels of detail.

- Open Source available here

-

Cons:

- The implementation IS tricky.

TODO: Probabilistic map updates, Kalman Filter pre-context and Bayes pre-context for adding more content.

Signed Distance Functions (SDF)

A Signed Distance Function (SDF) is way to represent geometry implicitly. An SDF is a function

- The sign of the function indicates whether the point is inside (negative) or outside (positive) the surface.

- The surface itself is the set of all points where the function is zero, known as the zero level-set.

For example, the SDF for a sphere of radius

A key property is that the gradient of the SDF,

Occupancy Grids

An occupancy grid is a specific type of voxel grid where each cell

The map is updated sequentially using a Bayesian filter. Given a new measurement

This can be computationally expensive. A more efficient way to handle this is to use the log-odds representation. The odds of a cell being occupied are

The update then becomes a simple addition:

Here,

Probabilistic Map Update (Deeper Dive)

The occupancy of each voxel in a grid,

The update rule in log-odds form is efficient and avoids numerical instability near probabilities of 0 or 1.

-

Clamping Policy: To prevent probabilities from becoming permanently fixed at 0 or 1 (making them impossible to update later), a clamping policy is often used. This keeps the log-odds value within a certain range, for example,

. This ensures the map can adapt to dynamic changes in the environment. -

Multi-Resolution Queries: In hierarchical structures like OctoMaps, the belief of a parent node (a larger voxel) can be quickly determined from its children. A common approach is to set the parent's occupancy to the maximum occupancy value of its eight children. This allows for fast queries at different levels of detail.